How sensor technology will shape the cars of the future

By Vincent Hiligsmann, Melexis

Sensors are now a vital part of any modern automobile design, serving many different purposes. They are instrumental in helping car manufacturers bring models to market that are safer, more fuel efficient and more comfortable to drive. Over time, sensors will also enable greater degrees of vehicle automation, from which the whole industry stands to benefit.

Intelligent observation

Alongside full controllability and data processing, intelligent observability is one of the prerequisites for a car to take action on its own. To attain full observability, cars will need to process a wide variety of parametric data, including speed, current, pressure, temperature, positioning, proximity detection and gesture recognition. In proximity detection and gesture recognition, great strides have been made in recent years, with ultrasonic sensors and time-of-flight (ToF) cameras now starting to be fitted to vehicles.

Ultrasonic sensors

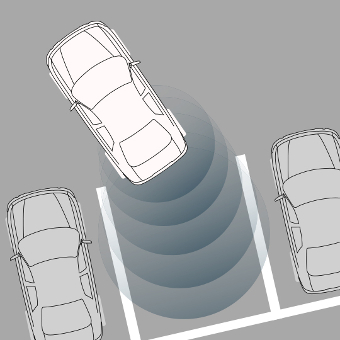

As automation in vehicles progresses, we are not only seeing new technologies applied to the automotive sector for the first time, but also witnessing mature automotive technologies being adapted to the special requirements that autonomous driving will mandate. At the moment, ultrasonic sensors are typically mounted in vehicle bumpers for assisted parking systems. So far, such sensors are only expected to function at driving speeds below 10 km/h, and they cannot measure very short distances with complete accuracy. In an autonomous car, however, such sensors could potentially be used in combination with radar, cameras and other sensing technologies to provide distance measurement.

Gesture recognition

While ultrasonic sensor technology is used to observe the outside world, ToF cameras are focused on the car interior. As the transition to autonomous driving will be a gradual one, it is important that drivers can switch from autonomous mode back to manual mode in specific scenarios.

Currently cars are only partially autonomous, through their Advanced Driver Assistance Systems (ADAS), and human involvement can be required at any moment. We expect the industry to advance towards greater levels of automation in the coming years, but even then the driver will still need to be able to take control in certain circumstances (e.g. when the car is in a city center). It will be a considerable length of time before this changes. Until then, a vehicle will need to be able to alert its driver, so real-time monitoring of the driver’s position and movements is crucial. Although still in its initial phases, ToF technology is already being employed today, for example to alert drivers when a lapse in concentration causes the vehicle to drift towards the edge of the road. It also enables functions to be carried out based on gesture recognition - for example, hand swipes to increase the radio volume or to answer an incoming phone call. The potential scope of ToF goes well beyond these sorts of tasks, however, and it will be pivotal in more sophisticated driving automation as this is developed. ToF cameras will be able to map a driver’s entire upper body in 3D, so that it can be ascertained whether the driver’s head is facing the road ahead and whether their hands are on the wheel.

3D mapping of the traffic situation

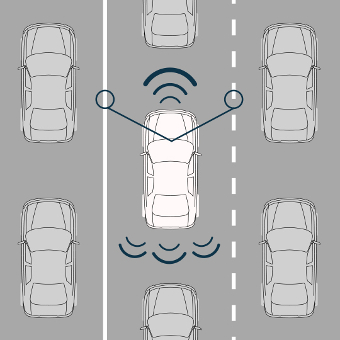

Today’s adaptive cruise control systems utilize radar to measure the distance to the vehicle in front. This technology performs well enough on motorways, but in an urban environment - where distances are shorter and pedestrians and/or vehicles can also approach from other directions - more precise position measurement is needed.

One solution is to add a camera for better determination of perspective. However, current image processing hardware is nowhere near good enough to detect all the important features with the speed and reliability needed to ensure safe driving. This is where lidar seems destined to prove advantageous. Lidar works on the same principle as radar: it measures the reflection of a transmitted signal. While radar relies on radio waves, lidar makes use of light beams (e.g. from a laser). The distance to the object or surface is calculated by measuring the time that elapses between the transmission of a pulse and the reception of its reflection. The big advantage of lidar is that it enables much smaller objects to be detected than is possible with radar. In contrast to a camera, which views its environment in focal planes, lidar delivers an accurate, relatively detailed 3D rendering. This makes it easy to isolate objects from what is in front of or behind them, regardless of the lighting conditions (day or night). As the cost of lidar technology gradually falls and further technological progress is made, the impetus for following this approach will increase.
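
The time-of-flight calculation described above can be illustrated with a minimal Python sketch. It is only an illustration of the principle, not how any particular lidar product is implemented: the function name `distance_from_round_trip` and the 200 ns example value are assumptions made for this example. The pulse travels to the object and back, so the one-way distance is half the propagation speed multiplied by the elapsed time.

```python
# Illustrative sketch only: names and example values are hypothetical.

# Speed of light in vacuum; in air the value is marginally lower.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(elapsed_s: float,
                             speed_m_per_s: float = SPEED_OF_LIGHT_M_PER_S) -> float:
    """Return the one-way distance in meters to a reflecting object.

    elapsed_s is the time between transmitting a pulse and receiving
    its reflection. The pulse covers the distance twice (out and back),
    hence the division by two.
    """
    if elapsed_s < 0:
        raise ValueError("elapsed time cannot be negative")
    return speed_m_per_s * elapsed_s / 2.0


# Example: a reflection received 200 nanoseconds after transmission
# corresponds to an object roughly 30 m away.
distance_m = distance_from_round_trip(200e-9)
print(f"{distance_m:.2f} m")
```

The same relationship applies to any pulse-echo measurement; passing a different `speed_m_per_s` (for instance the speed of sound in air) would model an ultrasonic sensor instead, which is why short urban distances demand very fine timing resolution from a light-based system.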

The next-generation sensors now being developed will ultimately define the autonomous driving experience that is being envisaged. Through innovation in the areas outlined in this insight, the cars of tomorrow will provide a clear, constantly updated picture of what is happening, both in the external environment and in terms of what their occupants are doing. Sensing technologies therefore hold the key to the future of the automotive industry.